Donoghue and the moment that changed the field

This week Professor John Donoghue, the creator of the first brain chip and the researcher behind the BrainGate research programme, told broadcasters that brain–computer interfaces (BCIs) have reached a "tipping point". The claim comes as engineering progress, urgent clinical need and an expanding set of commercial teams converge: tiny electrode arrays, faster algorithms and new implant designs are finally being tested in people rather than only in laboratory models. For patients paralysed by spinal cord injury or stroke, the promise is tangible; for everyone else, the reality raises questions about privacy, consent and who will set the rules for neural data.

Creator of the first brain chip: technical roots and early experiments

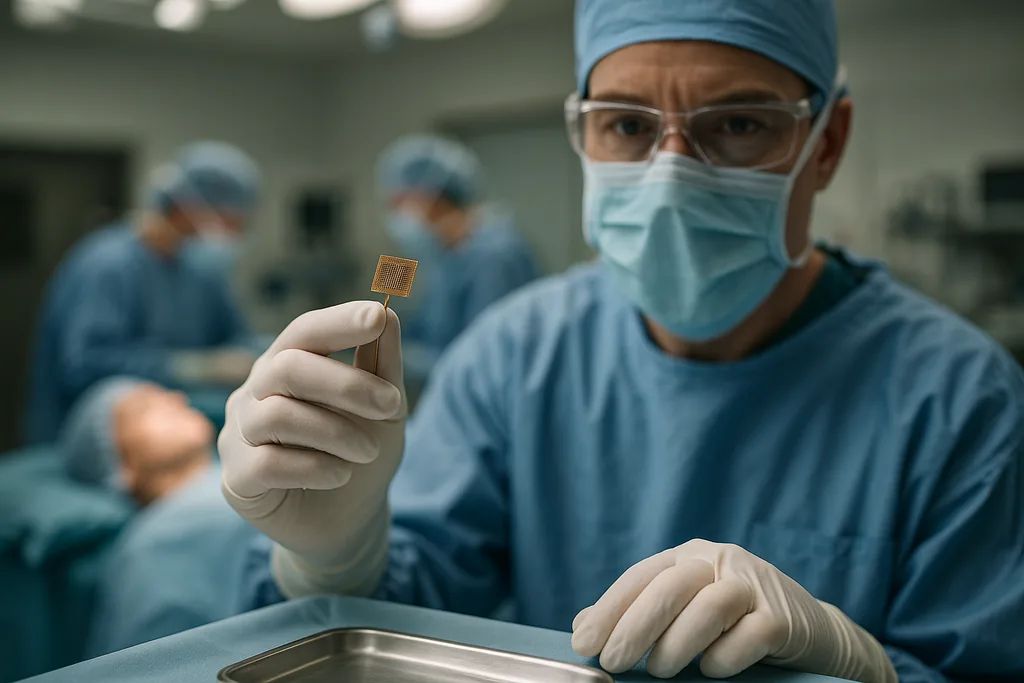

When people ask "what is a brain chip and how does it work?" they are usually referring to an invasive implant: a compact array of electrodes placed on or in brain tissue that senses electrical activity from neurons. Those analog signals are amplified, digitised and fed into software that decodes patterns of neural firing into commands — moving a cursor, selecting letters, or driving a robotic arm. The key components are the electrode interface, low‑noise electronics, signal processing and machine‑learning decoders that map neural patterns to intent.
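The decoding step can be made concrete with a toy example. The sketch below is not the BrainGate decoder; it uses a classic textbook technique, the population-vector method, on simulated data. It assumes each simulated neuron fires fastest for one "preferred" movement direction and that its rate falls off as a cosine away from it. The firing-rate constants, the noise level and all function names are hypothetical, chosen only for illustration.

```python
import math
import random

# Hypothetical tuning parameters, chosen only for illustration.
BASELINE_HZ = 10.0    # resting firing rate of every simulated neuron
MODULATION_HZ = 8.0   # how strongly the rate varies with direction


def preferred_directions(n_neurons):
    """Evenly spaced preferred directions (radians), one per simulated neuron."""
    return [2.0 * math.pi * i / n_neurons for i in range(n_neurons)]


def simulate_rates(intended_angle, directions, noise_sd=2.0, rng=None):
    """Cosine-tuned firing rates plus Gaussian noise.

    Stands in for the amplified, digitised recordings; rates are clamped
    at zero because a firing rate cannot be negative.
    """
    rng = rng or random.Random(0)
    rates = []
    for d in directions:
        rate = BASELINE_HZ + MODULATION_HZ * math.cos(intended_angle - d)
        rates.append(max(0.0, rate + rng.gauss(0.0, noise_sd)))
    return rates


def decode_direction(rates, directions):
    """Population-vector decode.

    Each neuron 'votes' for its preferred direction, weighted by how far
    its rate sits above baseline; the angle of the summed vector is the
    estimate of the intended movement direction.
    """
    x = sum((r - BASELINE_HZ) * math.cos(d) for r, d in zip(rates, directions))
    y = sum((r - BASELINE_HZ) * math.sin(d) for r, d in zip(rates, directions))
    return math.atan2(y, x)


def angular_error(a, b):
    """Smallest absolute difference between two angles, in radians."""
    return abs(math.atan2(math.sin(a - b), math.cos(a - b)))


# Usage: the simulated user intends to move at 60 degrees.
dirs = preferred_directions(64)
intended = math.radians(60.0)
rates = simulate_rates(intended, dirs, rng=random.Random(1))
estimate = decode_direction(rates, dirs)
print(f"intended {math.degrees(intended):.1f} deg, "
      f"decoded {math.degrees(estimate):.1f} deg")
```

Real systems have to cope with far messier signals and typically rely on decoders fitted to each user's recordings, but the basic idea is the same: many noisy channels are combined into a single estimate of intent, which then drives a cursor or a prosthetic.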

John Donoghue and colleagues demonstrated the potential of this approach more than two decades ago with an early BrainGate system that recorded activity from motor cortex in people with severe paralysis. That work answered a fundamental question: are the cortical signals that drive movement preserved even after loss of peripheral function? The answer was yes — and once researchers could reliably pick up those signals, the door opened to clinically useful decoding. Early demonstrations translated those spikes into cursor motion and simple prosthetic control; they showed that targeted electrodes and clever decoding could restore function a user thought lost.

Clinical push and engineering barriers

The field has evolved from bench experiments to a growing number of human trials. Several companies and academic groups now pursue implantable systems that are smaller, more power‑efficient and designed for longer residency in the body. But engineering hurdles remain decisive for regulatory approval: the device must not provoke chronic infection, must avoid damaging tissue over years, and must control heat dissipation — the brain tolerates only a degree or two of local temperature rise before harm occurs. Longevity of the interface is another concern; electrodes that work well for weeks can degrade over months or years as the brain forms scar tissue around foreign materials.

These constraints help explain why most work today focuses on medical indications with clear benefit–risk trade-offs: restoring communication, enabling basic limb control, or giving paralysed patients independence. The clinical bar for elective, consumer use is much higher, because tolerance for surgical risks is far lower when the benefit is convenience rather than restored health.

Creator of the first brain chip: why experts call it a tipping point

Experts point to several converging trends that justify talk of a tipping point. First, materials science and microfabrication have produced electrode arrays that are denser and more biocompatible than before. Second, machine‑learning decoders have become substantially better at extracting intent from noisy neural signals, enabling richer control with fewer electrodes. Third, systems integration — combining sensors, low‑power electronics and wireless telemetry — now fits into packages that can be implanted and maintained for longer periods. Put together, these advances have moved the technology from fragile demonstrations to systems that clinicians feel able to test in small human cohorts.

Donoghue and other pioneers also highlight a pragmatic driver: clinical demand. There are millions of people worldwide with paralysis, locked‑in syndrome or severe motor deficits who stand to benefit from even modest gains in communication or mobility. That unmet need accelerates investment and regulatory attention, which in turn pulls engineering forward faster than a purely academic cycle would.

Privacy, security and ethical risks

Even as the systems become technically viable, the ethical and social questions multiply. A central worry is neural data protection: what counts as a person’s thoughts or intentions, who owns the signals recorded from a brain, and how must consent be structured when devices can log long streams of neural activity? Donoghue and others emphasise that current systems decode fairly specific control signals — not literal "mind reading" — but they also warn that improvements in analytics could extract more from the same measurements over time.

Security is an underappreciated risk. Any implant with a wireless interface could be attacked or spoofed, or have its recorded data exfiltrated, if appropriate safeguards are not built in. The field has already seen ethically fraught experiments with animals, notably recent reports of neural implants used to nudge bird navigation, that underscore how neural interfaces can be repurposed outside medical contexts. Those non‑medical demonstrations sharpen the need for governance: rules that cover research use, domestic and military application, and commercial deployment.

Clinical risks remain acute as well. Surgery carries infection and bleeding risks; long‑term implants face tissue reaction, device failure and the possibility of loss of function if the system degrades. Regulators will therefore focus not only on efficacy but on the durability, safety margins and mechanisms for removing or upgrading implants.

When might consumer brain chips appear?

Predictions vary, but a practical distinction helps: therapeutic implants for severe disability are likely to reach approved, limited clinical use earlier than consumer products. The former have high immediate benefit and therefore a stronger case for tolerating invasive procedures. The latter would need implants that are demonstrably safe, simple to implant and remove, cost effective, and socially acceptable — a much tougher combination.

Non‑invasive brain‑computer interfaces — headsets that read surface EEG signals or use optical sensors — are already in consumer markets for simple tasks (gaming, attention tracking), and improvements there may trickle into daily life faster. Fully implantable, high‑bandwidth consumer chips that enable seamless control of devices remain speculative: most experts expect years to a decade or more, with timelines stretching further if policy and public debate slow deployment. In short, near‑term commercial availability is more likely for wearable, non‑surgical BCIs than for surgical implants marketed directly to general consumers.

Policy, regulation and the role of clinicians

Because implants sit at the intersection of medicine, consumer electronics and data science, they require hybrid oversight. Clinical trials will test safety and efficacy; ethical review boards must assess consent frameworks and long‑term follow‑up; and data‑protection regulators will need to classify neural data for custody, retention and permitted uses. Researchers and clinicians have called for proactive policy so that public debate, legal safeguards and technical standards keep pace with deployment rather than chase it after the fact.

That is precisely the argument Donoghue and others are making: reach for the therapeutic potential, but build governance now so communities, patients and clinicians are not left reacting to technological surprises later. The alternative is ad‑hoc choices that could erode public trust and slow the technology’s legitimate benefits.

For patients who have already lived with paralysis for years, a device that lets them send a message or move a cursor can change daily life. For society at large, the arrival of practical, implantable BCIs forces a rare combination of technical, legal and ethical work. The technology has matured to a point where those conversations can no longer be postponed.

Sources

- Brown University (BrainGate research)

- Neuralink (company reports and human trials)

- Duke University (bioethics commentary)
