Decoding Trust: How Machine Learning Models Predict Human Comfort in Robot Collaboration

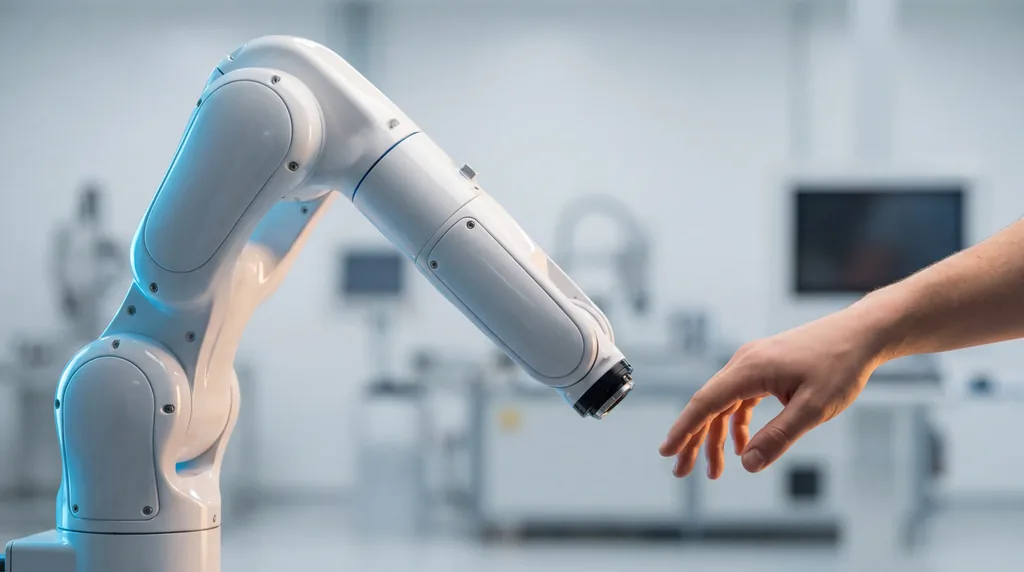

As Industry 5.0 shifts the focus toward human-centric manufacturing, the ability of robots to gauge human trust has become a critical safety requirement. Researchers from Aalborg University and the Istituto Italiano di Tecnologia (IIT) have developed a data-driven framework that lets machines interpret behavioral cues, so they can adjust their movements and build rapport with human operators in real time. Moving beyond traditional post-interaction surveys, the team demonstrated that human trust can be estimated from physical movement and interaction parameters, with their best model reaching 84.07% accuracy in a simulated chemical-handling task.

The Evolution of Industry 5.0

The industrial landscape is currently undergoing a paradigm shift from Industry 4.0—which prioritized hyper-efficiency and mass automation—toward the more nuanced goals of Industry 5.0. This new era emphasizes human-centric manufacturing, where collaborative robots (cobots) are not merely tools, but partners working alongside human operators. This transition necessitates a deep focus on workplace safety, ergonomics, and psychological comfort. In a collaborative setting, "blind" automation is no longer sufficient; a robot that moves too quickly or too close to an operator can induce stress, lead to human error, or even cause physical harm.

Trust is the linchpin of this interaction. As defined by researchers in the field, trust is the belief that an agent will support a person’s goals, particularly in uncertain or vulnerable situations. In industrial settings, an imbalance of trust is dangerous: under-trust can lead to operator overload as workers micro-manage the machine, while over-trust can result in complacency and safety risks. To address this, the research team, including Giulio Campagna and Arash Ajoudani, sought to create a system that allows robots to sense these subtle psychological states and recalibrate their behavior accordingly.

The Mechanics of Trust Modeling

The core of the study, recently published in IEEE Robotics and Automation Letters, revolves around a novel data-driven framework designed to assess trust through behavioral indicators. At its heart is a Preference-Based Optimization (PBO) algorithm. Unlike traditional models that might guess a user's preferences, the PBO algorithm generates specific robot trajectories and then actively solicits operator feedback. This creates a feedback loop where the robot learns which movements—such as specific execution times or separation distances—are perceived as trustworthy by the human partner.
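The feedback loop described above can be illustrated with a very small sketch. This is not the authors' PBO algorithm: it is a plain random-search loop driven by pairwise preferences, and the parameter bounds, the `simulated_operator_prefers` stand-in for the human, and its hidden "ideal" values are all assumptions made for illustration.

```python
import random

# Hypothetical parameter ranges; the article names the parameters, not their bounds.
BOUNDS = {
    "execution_time_s": (2.0, 10.0),
    "separation_m": (0.2, 1.0),
    "height_above_head_m": (0.0, 0.5),
}


def sample_candidate(rng):
    """Draw one random trajectory parameter set within the assumed bounds."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in BOUNDS.items()}


def simulated_operator_prefers(a, b):
    """Stand-in for the human operator: True if `a` is preferred over `b`.

    The 'ideal' values below are invented so the sketch can run without a person.
    """
    ideal = {"execution_time_s": 6.0, "separation_m": 0.6, "height_above_head_m": 0.3}

    def discomfort(c):
        # Squared distance to the ideal, normalised by each parameter's range.
        return sum(
            ((c[k] - ideal[k]) / (BOUNDS[k][1] - BOUNDS[k][0])) ** 2 for k in BOUNDS
        )

    return discomfort(a) < discomfort(b)


def preference_loop(prefers, n_queries=30, seed=0):
    """Keep the current favourite and replace it whenever a challenger is preferred."""
    rng = random.Random(seed)
    best = sample_candidate(rng)
    for _ in range(n_queries):
        challenger = sample_candidate(rng)
        if prefers(challenger, best):
            best = challenger
    return best


if __name__ == "__main__":
    print(preference_loop(simulated_operator_prefers))
```

In a real deployment the `prefers` callback would be the operator's answer after watching two trajectories, and a proper preference-based optimizer would choose the next candidate far more efficiently than random sampling does here.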

The research was conducted by a multidisciplinary team including Marta Lagomarsino and Marta Lorenzini from the Human-Robot Interfaces and Interaction Lab at IIT, alongside researchers from Aalborg University’s Human-Robot Interaction Lab. By quantifying subjective human feelings into mathematical interaction parameters, the team has bridged the gap between human psychology and robotic control. The framework optimizes three key variables: the robot’s execution time, the physical distance between the human and the machine, and the vertical proximity of the robot's end-effector to the user’s head.

Translating Behavior into Data

A significant challenge in robotics is the transition from qualitative observation to quantitative predictive modeling. To overcome this, the researchers identified specific behavioral indicators—derived from both the human’s body language and the robot’s own motion—that signal levels of trust. Human-related factors were tracked using whole-body motion capture, monitoring the head and upper body for signs of hesitation or comfort. These indicators were then paired with the robot’s motion characteristics to provide a holistic view of the collaboration.

By using explicit operator feedback as the "ground truth," the researchers trained machine learning models to recognize the physical signatures of trust. Lead author Giulio Campagna and his colleagues focused on the dynamic relationship between the robot’s path and the way the human moves in response to it. This methodology allows the model to predict trust preferences even when the operator is not providing active feedback, turning silent physical behavior into a rich stream of data that informs the robot's next move.

Case Study: Chemical Industry Simulation

To test the framework in a realistic high-stakes environment, the team implemented a scenario involving the hazardous task of mixing chemicals. In this simulation, a robotic manipulator assisted a human operator in pouring and transporting chemical agents. This environment was chosen specifically because the potential for danger—and thus the necessity for trust—is inherently high. The experiment involved tracking the operator's whole-body movement while the robot performed varying trajectories based on the PBO algorithm's parameters.

The results were compelling. The research team evaluated several machine learning models, with the "Voting Classifier" achieving a standout accuracy of 84.07%. The model also recorded an Area Under the ROC Curve (AUC-ROC) score of 0.90. On this metric, 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, so a score of 0.90 indicates a strong ability to distinguish between different trust levels. These metrics suggest that the framework is not just guessing, but is picking up a real relationship between human motion and internal trust states.
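To make the two reported metrics concrete, here is a minimal pure-Python sketch of how a voting ensemble's accuracy and AUC-ROC could be computed. The article does not say whether the classifier used hard or soft voting; soft voting (averaging predicted probabilities) is assumed here, and the labels and model outputs are invented toy numbers, not the study's data.

```python
def soft_vote(prob_lists):
    """Average the per-sample probabilities of several models (soft voting)."""
    n_models = len(prob_lists)
    return [sum(ps) / n_models for ps in zip(*prob_lists)]


def accuracy(labels, scores, threshold=0.5):
    """Fraction of samples whose thresholded score matches the label."""
    preds = [1 if s >= threshold else 0 for s in scores]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def auc_roc(labels, scores):
    """Probability that a random positive outranks a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data: 1 = operator reported trust, 0 = operator reported distrust.
labels = [1, 1, 1, 0, 0, 0]
model_a = [0.9, 0.6, 0.4, 0.5, 0.2, 0.1]
model_b = [0.8, 0.7, 0.3, 0.6, 0.3, 0.2]
model_c = [0.7, 0.8, 0.6, 0.3, 0.4, 0.3]

ensemble = soft_vote([model_a, model_b, model_c])

if __name__ == "__main__":
    print("accuracy:", accuracy(labels, ensemble))
    print("AUC-ROC:", auc_roc(labels, ensemble))
```

Accuracy depends on the chosen threshold, while AUC-ROC only looks at how well the scores rank trusting samples above distrusting ones, which is why the two numbers are usually reported together.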

Future Implications for Workplace Safety

The implications of this research extend beyond the laboratory. By integrating real-time trust sensing into industrial robots, companies could improve workplace safety. A robot that senses an operator’s discomfort can autonomously slow down its movements or increase its distance, reducing the operator’s cognitive load and stress. This creates a "closed-loop" system in which the robot continually recalibrates its behavior to maintain an appropriate level of trust, helping to prevent the accidents that can stem from human-robot friction.
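A closed loop of this kind could look roughly like the sketch below. The `MotionParams` fields, the trust thresholds, and the step sizes are all hypothetical; the article does not describe how a deployed controller would map a trust estimate to motion changes.

```python
from dataclasses import dataclass


@dataclass
class MotionParams:
    speed_scale: float   # fraction of nominal speed, between 0.2 and 1.0 (assumed limits)
    separation_m: float  # target human-robot distance in metres (assumed limits 0.3 to 1.0)


def adapt(params, trust_prob, low=0.4, high=0.7):
    """Return updated motion parameters given a predicted trust probability.

    Thresholds and step sizes are illustrative assumptions only.
    """
    if trust_prob < low:
        # Operator appears uncomfortable: slow down and back away.
        return MotionParams(
            speed_scale=max(0.2, params.speed_scale * 0.8),
            separation_m=min(1.0, params.separation_m + 0.1),
        )
    if trust_prob > high:
        # Operator appears comfortable: cautiously return toward nominal behaviour.
        return MotionParams(
            speed_scale=min(1.0, params.speed_scale * 1.1),
            separation_m=max(0.3, params.separation_m - 0.05),
        )
    # In between: leave the behaviour unchanged.
    return params


if __name__ == "__main__":
    params = MotionParams(speed_scale=1.0, separation_m=0.5)
    for trust in (0.2, 0.3, 0.5, 0.9):
        params = adapt(params, trust)
        print(trust, params)
```

The dead band between the two thresholds keeps the robot from oscillating when the trust estimate hovers around a single cut-off, and the hard limits on speed and distance keep the adaptation inside a fixed safety envelope.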

Furthermore, the use of SHAP (SHapley Additive exPlanations) values in the study adds a layer of explainability to the AI. This allows researchers to see exactly which behavioral indicators—such as the speed of a human's withdrawal or the angle of their head—most influence the trust score. As Arash Ajoudani and the team at IIT continue to refine these models, the focus will likely shift toward personalization. Future systems may be able to adapt to the unique personality traits and emotional responses of individual workers, fostering a more intuitive and resilient collaborative ecosystem. In the world of Industry 5.0, the most efficient robot may soon be the one that understands its human partner best.
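For readers unfamiliar with the idea behind SHAP, the sketch below computes exact Shapley values for a tiny toy model by averaging each feature's marginal contribution over every feature ordering. It does not use the SHAP library, which relies on faster approximations; the feature names, the `toy_trust_score` function, and the all-zero baseline are assumptions chosen purely for illustration.

```python
from itertools import permutations


def shapley_values(model, x, baseline):
    """Exact Shapley values of `model` at `x`, relative to a single baseline input."""
    names = list(x)
    totals = {n: 0.0 for n in names}
    orders = list(permutations(names))
    for order in orders:
        current = dict(baseline)
        prev = model(current)
        for n in order:
            # Switch feature n from its baseline value to its actual value
            # and credit it with the resulting change in the model output.
            current[n] = x[n]
            new = model(current)
            totals[n] += new - prev
            prev = new
    return {n: t / len(orders) for n, t in totals.items()}


def toy_trust_score(f):
    """Invented trust score: fast withdrawal and a turned head lower trust."""
    return (
        0.8
        - 0.5 * f["withdrawal_speed"]
        - 0.2 * f["head_angle"]
        - 0.3 * f["withdrawal_speed"] * f["robot_speed"]
    )


x = {"withdrawal_speed": 0.6, "head_angle": 0.5, "robot_speed": 1.0}
baseline = {"withdrawal_speed": 0.0, "head_angle": 0.0, "robot_speed": 0.0}
phi = shapley_values(toy_trust_score, x, baseline)

if __name__ == "__main__":
    for name, value in phi.items():
        print(f"{name}: {value:+.3f}")
```

The attributions always sum to the difference between the model's output at `x` and at the baseline, which is the property that makes Shapley-based explanations easy to read as "how much each indicator moved the trust score."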