In the evolving landscape of industrial automation, robots have demonstrated remarkable proficiency in performing repetitive, high-speed tasks guided by sophisticated computer vision. However, when faced with "contact-rich" scenarios—such as threading a thin wire into a connector or assembling delicate electronic components—even the most advanced visual systems often reach a plateau. These tasks require more than just sight; they demand a nuanced sense of touch and an understanding of physical resistance. To bridge this sensory gap, a research team led by Tailai Cheng, Fan Wu, and Kejia Chen has developed TacUMI, a multi-modal handheld interface designed to capture the intricate dance of force and tactile feedback during human demonstrations, providing a new blueprint for how robots might learn complex physical interactions.

The Limitations of Vision-Only Robotics

The fundamental challenge in modern robot learning lies in the "black box" of physical interaction. While current frameworks such as Diffusion Policy and ACT (Action Chunking with Transformers) have shown success in short-horizon tasks, they often treat a demonstration as a monolithic block of data. For complex, long-horizon tasks like cable mounting, visual observations and robot proprioception (the robot's internal sense of its own joint positions) are frequently insufficient to tell which stage of the task is under way. For instance, when a human operator stretches a cable to create tension before inserting it into a slot, the visual change might be negligible, yet the physical state of the task has changed significantly. Without the ability to "feel" this tension, a robot struggles to identify the transition between stages of the operation, which can lead to failed execution when the environment deviates even slightly from the training data.

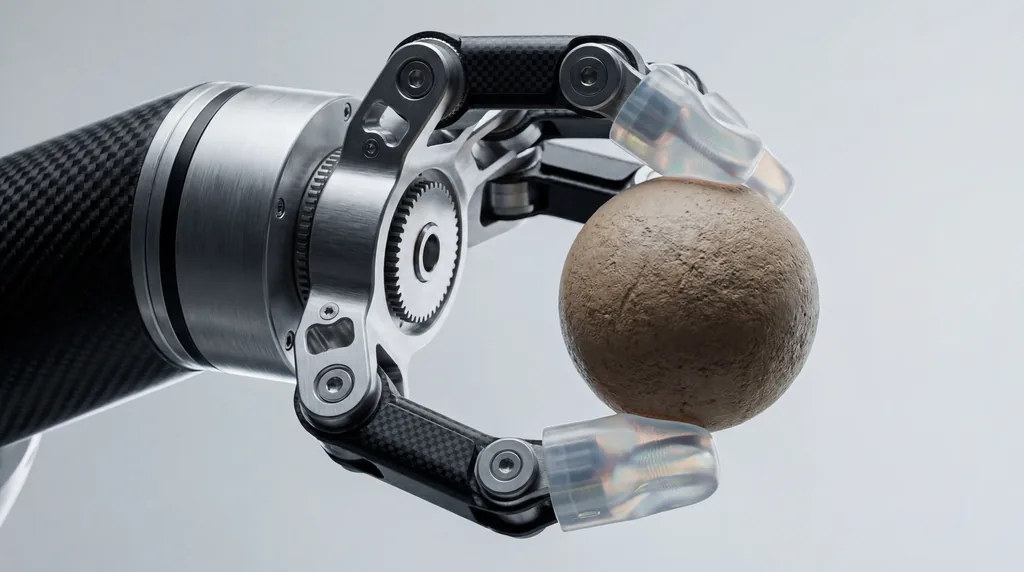

Introducing TacUMI: A Multi-Modal Breakthrough

Building upon the foundational Universal Manipulation Interface (UMI), researchers from the Technical University of Munich and Agile Robots SE, together with partners at Nanjing University and Shanghai University, have introduced TacUMI. The system is a compact, robot-compatible gripper designed for high-fidelity data collection. Unlike the original UMI, which relied heavily on cameras and SLAM-based (Simultaneous Localization and Mapping) pose estimation, TacUMI integrates a suite of specialized sensors: ViTac sensors on the fingertips for high-resolution tactile mapping, a six-degree-of-freedom (6D) force-torque sensor at the wrist, and a high-precision 6D pose tracker. This combination allows visual, force, and tactile data to be acquired in sync, producing a rich, multi-dimensional record of human dexterity.
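Sensors like these typically run at different rates, so synchronized acquisition implies some step that places every modality on a common timeline. The article does not describe how TacUMI does this. The sketch below assumes each stream is a time-sorted list of `(timestamp, value)` pairs and resamples every stream onto a reference clock (for example, the camera frames) by nearest-timestamp matching. The function names and the `max_gap` tolerance are hypothetical, chosen only for illustration.

```python
from bisect import bisect_left
from typing import Any, Dict, List, Sequence, Tuple

# One sensor reading: (timestamp in seconds, measurement of any type).
Sample = Tuple[float, Any]


def nearest_index(times: Sequence[float], t: float) -> int:
    """Index of the entry in the ascending list `times` closest to `t`."""
    i = bisect_left(times, t)
    if i == 0:
        return 0
    if i == len(times):
        return len(times) - 1
    # Pick whichever neighbour (i - 1 or i) is closer in time.
    return i if times[i] - t < t - times[i - 1] else i - 1


def align_to_reference(
    reference_times: Sequence[float],
    streams: Dict[str, List[Sample]],
    max_gap: float,
) -> List[Dict[str, Any]]:
    """Build one multi-modal frame per reference timestamp.

    A frame is dropped if any stream has no sample within `max_gap` seconds.
    """
    # Pre-extract timestamps so each lookup is a binary search.
    stream_times = {name: [ts for ts, _ in samples] for name, samples in streams.items()}
    frames: List[Dict[str, Any]] = []
    for t in reference_times:
        frame: Dict[str, Any] = {"t": t}
        for name, samples in streams.items():
            times = stream_times[name]
            if not times:
                break  # empty stream: no frame can be complete
            j = nearest_index(times, t)
            if abs(times[j] - t) > max_gap:
                break  # this modality has a gap around t
            frame[name] = samples[j][1]
        else:
            # Loop finished without `break`: every modality was found.
            frames.append(frame)
    return frames


if __name__ == "__main__":
    # Toy data with made-up rates and values, for illustration only.
    camera_t = [0.000, 0.033, 0.066]
    streams = {
        "force": [(0.000, 0.1), (0.010, 0.2), (0.030, 0.5), (0.040, 0.6), (0.070, 0.9)],
        "tactile": [(0.001, "t0"), (0.035, "t1")],
    }
    for frame in align_to_reference(camera_t, streams, max_gap=0.02):
        print(frame)
```

In the toy data, the third camera frame is dropped because the nearest tactile sample is more than 20 ms away. Dropping incomplete frames keeps every training sample fully multi-modal; interpolation would be an alternative for slowly varying signals such as wrist force.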

Capturing the Human Touch

The hardware design of TacUMI is specifically engineered to eliminate the "noise" typically associated with handheld demonstration devices. A standout feature is a continuously lockable jaw mechanism. In traditional handheld devices, the force the human operator exerts to maintain a grip can interfere with the wrist sensor's ability to record the actual interaction forces between the tool and the object. By allowing the operator to lock the gripper once an object is secured, TacUMI ensures that the force-torque sensor records only the interaction forces of the task itself. Operators can therefore demonstrate delicate tasks naturally, while the device captures high-tension interactions, such as those found in deformable linear object (DLO) manipulation, without slippage or sensor contamination.

Semantic Segmentation and Task Decomposition

A core contribution of the research is a multi-modal segmentation framework built on temporal models, specifically a Bidirectional Long Short-Term Memory (BiLSTM) network. The goal of this framework is to decompose long-horizon demonstrations into semantically meaningful "skills" or modules. By processing the synchronized streams of tactile, force, and visual data, the model can detect event boundaries such as the moment a cable is gripped, the moment tension is applied, and the moment it is successfully seated. This decomposition is critical for hierarchical learning, in which a robot first learns individual motor skills and then learns a high-level coordinator to sequence them, making the learning process more scalable and interpretable than end-to-end approaches.
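A temporal model such as a BiLSTM typically emits one skill label per time step, so event boundaries come from a post-processing step. The following is a minimal sketch of such a step, not the authors' code: it merges consecutive identical labels into segments, optionally absorbs very short segments (a common way to suppress label flicker, assumed here rather than taken from the paper), and reads boundaries off the segment starts. The skill names `grasp`, `tension`, and `insert` and the `min_len` parameter are hypothetical.

```python
from typing import List, Tuple

# (label, first frame inclusive, last frame exclusive)
Segment = Tuple[str, int, int]


def labels_to_segments(labels: List[str]) -> List[Segment]:
    """Merge runs of identical per-frame labels into segments."""
    segments: List[Segment] = []
    start = 0
    for i in range(1, len(labels) + 1):
        # Close the current run when the label changes or the sequence ends.
        if i == len(labels) or labels[i] != labels[start]:
            segments.append((labels[start], start, i))
            start = i
    return segments


def merge_short_segments(segments: List[Segment], min_len: int) -> List[Segment]:
    """Absorb segments shorter than `min_len` frames into the preceding segment.

    Adjacent segments that end up with the same label are fused. A short
    first segment is kept, since there is nothing before it to absorb it.
    """
    merged: List[Segment] = []
    for label, start, end in segments:
        if merged and (end - start < min_len or merged[-1][0] == label):
            prev_label, prev_start, _ = merged[-1]
            merged[-1] = (prev_label, prev_start, end)
        else:
            merged.append((label, start, end))
    return merged


def boundaries(segments: List[Segment]) -> List[int]:
    """Frame indices where a new skill begins (every segment start but the first)."""
    return [start for _, start, _ in segments[1:]]
```

For example, a sequence of three `grasp` frames, four `tension` frames, one stray `grasp` frame, two `tension` frames, and three `insert` frames yields boundaries at frames 3 and 10 with `min_len=2`, because the single stray frame is absorbed into the tensioning phase.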

Case Study: Mastering Delicate Electronics Assembly

To validate TacUMI, the researchers evaluated the system on a challenging cable mounting task, a staple of electronic assembly that remains difficult to automate. The task required the operator to pick up a cable, navigate a cluttered environment, apply a specific tension, and insert the connector into a precise housing. The segmentation framework achieved more than 90 percent accuracy, and performance improved as more modalities were added. While vision-only models often failed to distinguish between the "tensioning" and "inserting" phases, adding tactile and force data allowed the model to locate transition boundaries with high precision, indicating that multi-modal sensing is essential for understanding contact-rich tasks.
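The article does not define "segmentation accuracy." A common convention in temporal action segmentation is frame-wise accuracy: the fraction of time steps whose predicted label matches the ground truth. Boundary precision is often scored separately by checking whether each true transition has a predicted one within a small tolerance. The sketch below implements both under those assumptions; it is not necessarily the metric used in the TacUMI evaluation, and `tolerance` is a free, hypothetical parameter.

```python
from typing import List, Sequence


def frame_accuracy(pred: Sequence[str], truth: Sequence[str]) -> float:
    """Fraction of time steps whose predicted label equals the ground-truth label."""
    if len(pred) != len(truth) or not truth:
        raise ValueError("pred and truth must be non-empty and equally long")
    correct = sum(p == t for p, t in zip(pred, truth))
    return correct / len(truth)


def change_points(labels: Sequence[str]) -> List[int]:
    """Indices i where labels[i] differs from labels[i - 1]."""
    return [i for i in range(1, len(labels)) if labels[i] != labels[i - 1]]


def boundary_hit_rate(pred: Sequence[str], truth: Sequence[str], tolerance: int) -> float:
    """Share of true boundaries matched by a predicted boundary within `tolerance` frames.

    Uses simple greedy matching: each predicted boundary can match at most once.
    """
    true_bounds = change_points(truth)
    if not true_bounds:
        return 1.0  # nothing to detect
    unmatched = change_points(pred)
    hits = 0
    for b in true_bounds:
        match = next((p for p in unmatched if abs(p - b) <= tolerance), None)
        if match is not None:
            unmatched.remove(match)
            hits += 1
    return hits / len(true_bounds)
```

Reporting both numbers helps separate a model that labels most frames correctly from one that also places the transitions, such as tensioning to inserting, at the right moment.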

The Role of Multi-Institutional Collaboration

The development of TacUMI is the product of a collaboration across several institutions. Lead author Tailai Cheng, affiliated with both the Technical University of Munich and Agile Robots SE, worked alongside Kejia Chen, Lingyun Chen, and other colleagues to refine the hardware-software integration. Fan Wu of Shanghai University and Zhenshan Bing of Nanjing University were instrumental in developing the algorithmic framework that allows the system to generalize across different data collection methods. Notably, the researchers showed that a model trained on TacUMI-collected data could be applied to demonstrations collected via conventional robot teleoperation with comparable accuracy, suggesting that the approach carries over across different robotic embodiments.

Future Directions for Robot Learning from Demonstration

The success of the TacUMI interface opens several new avenues for Robot Learning from Demonstration (LfD). By providing a practical foundation for the scalable collection of high-quality, multi-modal data, the system brings the field closer to human-like tactile sensitivity in autonomous systems. The researchers suggest that the next steps involve scaling TacUMI to more diverse and unpredictable industrial applications, such as soft-material handling and complex multi-tool assembly. As robots move out of rigid factory settings and into more dynamic environments, the ability to "feel" their way through a task, facilitated by devices like TacUMI, will likely become as fundamental as the ability to see.

Implications for the Robotics Industry

For the broader robotics industry, TacUMI signals a shift away from reliance on expensive, cumbersome teleoperation setups. By lowering the barrier to collecting sophisticated tactile data, this handheld interface allows for faster iteration in robot training. In sectors like electronics manufacturing and household services, where the cost of failure is high and tasks are complex, the ability to break long-horizon actions into learnable, tactile-informed modules could substantially reduce the time required to deploy autonomous solutions. As Fan Wu and the research team note, integrating these sensory modalities is not just a technical upgrade; it is a necessary evolution for robots meant to operate in a physical world defined by touch and resistance.